How to Perform a Website Audit (and find the holes in your funnel)

So your site’s not performing the way you need it to. You *know* it’s capable of more, but you can’t find the dirty little gremlins in the experience to fix them. The first step is to go back to first principles and do a full experience audit. Below is a generic guide to performing that audit, which you will obviously need to tailor to your specific site in ways only you will know.

If you have the time and manpower, I recommend that multiple people perform this audit independently and then compare notes. You’re much more likely to get the whole picture. But if not, one person will do.

Objective: Use this exercise to identify all the places where you expect one thing but reality shows another, then ask yourself why that is and investigate it. Make special note of any findings that strike you as strange, unexpected, poorly performing, or annoying. Fix what’s broken and make a list of PLACES in the experience where you want to run tests (we’ll get to how to develop the test list and prioritize the backlog in another post).

Step 1: The State of the State

Reconnect with your business, your audience, and the market in general. This is a brush-up, in case you’re not already doing it regularly. But when things go south or aren’t behaving the way you think they should, this is where to start.

Where are you in the cycle of your business? Most businesses have up and down cycles—no one is gangbusters all the time, not even Amazon. (See: Amazon created Prime Day in July to increase sluggish sales at a time when everyone is on vacation and not really interested in buying anything.) Whether it’s seasonal, yearly, quarterly, based on the stock market or consumer habits, there’s a cycle, and you know it. My guess is that if you’re here, you’re seeing alarming non-cyclic trends, but market trends always have SOME effect, so understand what that effect should be and account for it.

What is the state of your market/audience? Motivated, wary, exhausted, hungry, overwhelmed, worried, etc.? Whoever you’re trying to reach, they are (as a group) affected by the forces of their lives in fairly predictable, documented, specific ways. When I worked with real estate and the Fed started upping interest rates, it affected all real estate—different impacts between companies, but it affected them all nonetheless.

SWOT analysis. I’m sure you’ve done this analysis before, but it’s a useful one to do as often as needed, especially when you’re in a slump. In the current time, what are your strengths, weaknesses, opportunities, and threats?

Is there someone out there eating your lunch? How can you take it back?

Can you eat someone else’s lunch?

Is there an elephant-in-the-room type of challenge you’ve been trying to avoid doing something about but you know is eating into your metrics in some way?

Is your audience really hungry for a better way, a better solution, that no one has had the guts to do yet?

Put yourself in your customers’ shoes. What would help YOU and motivate you to engage with your business? What about you stands out and really addresses their needs and concerns?

Step 2: Experience Walkthrough

Next, go back to basics and experience your entire site and process as your audience does.

Test all your CTAs. Test every single one of your buttons/primary goal actions. (What do I know? You could care more about article clicks and session duration than purchases or leads—you do you.) My point is, whatever your primary goal is, test the connection between that action on the site and how it’s recorded in your source of truth. Make sure it’s being recorded and processed correctly.

I cannot stress this enough. Until you are absolutely SURE everything is working the way it’s supposed to work, stay on this step. I don’t care how tedious or time-consuming it is. MAKE. SURE. If I had a dollar for every time I’ve done one of these audits, found a broken connection somewhere, and heard “I was SURE that wasn’t true!” I wouldn’t be here.
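One way to keep yourself honest on this step is to log every action you trigger by hand, export what your source of truth recorded for the same window, and diff the two. Here is a minimal Python sketch of that comparison. The `(cta_id, event_name)` tuple shape, the CTA names, and the `reconcile` helper are all made up for illustration; your analytics export will look different.

```python
from collections import Counter

def reconcile(expected_actions, recorded_events):
    """Diff hand-triggered actions against what the source of truth recorded.

    Both arguments are lists of (cta_id, event_name) tuples. That shape is an
    assumption for this sketch, not the format of any particular tool.
    """
    expected = Counter(expected_actions)
    recorded = Counter(recorded_events)
    missing = expected - recorded      # triggered, but not recorded (or under-counted)
    unexpected = recorded - expected   # recorded, but not triggered (or double-counted)
    return dict(missing), dict(unexpected)

# Hypothetical manual test log and analytics export
manual_log = [
    ("hero_signup", "lead_submitted"),
    ("footer_signup", "lead_submitted"),
    ("pricing_buy", "purchase"),
]
recorded = [
    ("hero_signup", "lead_submitted"),
    ("pricing_buy", "purchase"),
    ("pricing_buy", "purchase"),
]

missing, unexpected = reconcile(manual_log, recorded)
print("Missing:", missing)
print("Unexpected:", unexpected)
```

Anything in the "missing" pile is a broken connection. Anything in the "unexpected" pile is probably a double-fire, which is just as bad for your numbers.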

How are people getting to the site/app?

What channels do they use? Social media, paid or organic search, display ads?

What page(s) do they enter on? What information do they have about the site’s purpose and where they are in the path from those entry points?

What are the demographics of each traffic source? What is your hypothesis about why these people are converting or not?

Check your experience on all primary devices. Don’t rely on simulators for this step—actually review your site on your phone and tablet. You’ll be shocked at the difference between a simulator and an actual device. (I have an older iPhone SE with a tiny screen and the developers I have worked with are forever bemoaning that their beautiful experiences simply DO NOT show up well on this phone.)

How is the experience on all these devices when they’re not on Wi-Fi? What’s the load time?

What are the primary use cases for the site? List all the major reasons why someone would come to your website and review how they achieve that goal. Count the steps they have to take to get there.

Which use cases have the least and most steps?

The highest and lowest conversion?

Identify the happy paths: the paths with the fewest and easiest steps. These SHOULD be your highest converting paths and use cases, and if they’re not, really dig into why.

Identify the UNhappy paths: the paths with more and the most tedious steps. (Hint: these will be great to test later.)
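If you like to see this laid out, the comparison of steps versus conversion can be sketched in a few lines of Python. The use case names, step counts, and conversion rates below are invented for illustration, and `check_happy_path` is a hypothetical helper, not something from an analytics tool.

```python
from dataclasses import dataclass

@dataclass
class UseCase:
    name: str
    steps: int               # steps you counted during the walkthrough
    conversion_rate: float   # from your analytics, as a fraction

def check_happy_path(use_cases):
    """Return (fewest-step use case, best-converting use case, do they match?)."""
    happy = min(use_cases, key=lambda u: u.steps)
    best = max(use_cases, key=lambda u: u.conversion_rate)
    return happy.name, best.name, happy.name == best.name

# Hypothetical numbers
cases = [
    UseCase("newsletter signup", steps=2, conversion_rate=0.01),
    UseCase("book a demo", steps=3, conversion_rate=0.05),
    UseCase("purchase", steps=5, conversion_rate=0.03),
]

happy, best, matches = check_happy_path(cases)
print(f"Happy path: {happy}; best converter: {best}; match: {matches}")
```

When the two don't match, as in this made-up data, that's your cue to dig into why the easiest path isn't the one people finish.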

Review heatmaps of your primary pages/page types. Check where people are clicking, looking, scroll depth, click areas. Both on mobile and desktop.

Review session recordings to see where people are experiencing trouble with your site and funnel. Hotjar and Clarity both have a dashboard to review any JavaScript errors, quick-backs, and dead link clicks.

A QUICK CAUTIONARY TALE:

I once performed a manual audit like this at the same time the development team executed the automated test of the same CTAs. My boss objected: “The QA team is on it—why are you doing it, too? They can do it faster.” My answer: “They’re testing to see if it works. I’m testing to see if it’s recording the actions the way we’re expecting.” It took days of my focusing on that job full time (it was a large and complex website), but in the end my audit uncovered that we were in fact recording several actions incorrectly in several places, which meant our reporting on those actions was inaccurate and our numbers were therefore also inaccurate. The gross results we were seeing were right, but the nuance was wrong, which in that business was a big deal. So do it manually. Don’t shortcut. Also automate. Compare notes. Experience the magic. Celebrate. </TED_TALK>

Step 3: Analytics Review

Is your data telling you what you think it’s telling you?

General site health check/awareness:

Session duration: How long does it take a newcomer to follow your happy path from above? Are people staying on the site long enough to get through it?

Pages per session: How many pages are in your funnel? Are most people abandoning your site before they get through it?

Exit pages: Where are most people jumping ship? (PRO TIP: high-exit pages are ripe for testing. Test sticking a “Why are you leaving?” survey on that page, look at your funnel, and check whether there’s a lower-commitment way to engage users that encourages them to come back.)

A note about bounce rate: This is a sticky one. It matters and you should know yours, but it should never ever be the metric you use to determine your success or failure. It is, at best, a bellwether indicator. So how do you integrate it into your reporting hierarchy and what meaning do you make of it? Here are my tips:

Know what your bounce rate is, and what the bounce rate is of your competitive set as a baseline. While they are different from you, it gives you some idea of what is normal and average for your audience.

Use analytics to check the bounce rate by session duration. WHEN do most people bounce? In the first 3 seconds? The first 10 seconds? 15? 20? If it’s super fast and mostly on mobile devices, you probably have a site load time problem. If it’s within 10 seconds, you may have an inbound marketing problem (disconnect between what someone thought your site would show versus what it really did show).
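If your analytics tool lets you export time-on-page for bounced sessions, the "WHEN do people bounce" question becomes a simple bucketing exercise. A rough Python sketch follows. The bucket boundaries (3, 10, and 20 seconds) are my reading of the thresholds above, and the sample durations are invented.

```python
from bisect import bisect_right
from collections import Counter

# Bucket edges in seconds; an assumption for this sketch, adjust to taste
BOUNDS = [3, 10, 20]
LABELS = ["under 3s", "3-10s", "10-20s", "20s+"]

def bucket_bounces(bounce_durations):
    """Count bounced sessions per time bucket, given seconds spent before leaving."""
    counts = Counter(LABELS[bisect_right(BOUNDS, d)] for d in bounce_durations)
    # Return every bucket, in order, even when a bucket is empty
    return {label: counts.get(label, 0) for label in LABELS}

# Hypothetical export: seconds on page for each bounced session
durations = [1, 2, 2.5, 4, 8, 12, 45]
for label, count in bucket_bounces(durations).items():
    print(f"{label:>9}: {count}")
```

Run it once for mobile and once for desktop. A pile-up in the first bucket on mobile only points toward load time; a pile-up in the second bucket on every device points toward a mismatch between the ad and the page.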

This is where exit surveys can really help you determine why people are leaving. Use them.

People bounce for 2 reasons: either they didn’t find what they were looking for, OR they found EXACTLY what they were looking for and therefore had no incentive to stay. In the first instance, let them go—these are not your people. But identify the demographic so you know who your audience is NOT so you don’t waste time with them. In the second instance, test some ways to entice them to stick around with more similar content. Ecommerce does this with similar products and “people who bought this also bought that” type personalization. Blogs do it with articles and content of a similar theme. Offer incentives, but avoid being too overwhelming/desperate. And if this is a problem for specific pages, make SURE your branding is crystal clear and prominent on those pages. It’s okay if they leave, but make sure they can recognize and remember where they got the useful information so they can make their way back.

Page/page type performance. What are your best performing pages? Worst? Does this match with what you’d expect from your happy path and unhappy path analysis? Any big surprises here? (If you have page types because you have data-driven pages, like product pages or category pages, check the content area for them to get overall performance information.)

Landing page performance. You identified your landing pages from all your primary marketing channels, so check the flow from those pages deeper into your funnel. Where do you lose people?
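To see where you lose people, it helps to put a number on each hand-off in the funnel. Below is a minimal Python sketch that takes session counts per funnel step and reports the share lost between each step and the next. The step names and counts are made up, and `drop_offs` is a hypothetical helper.

```python
def drop_offs(funnel):
    """Given ordered (step_name, sessions) pairs, return the share lost at each hand-off."""
    results = []
    for (step, count), (next_step, next_count) in zip(funnel, funnel[1:]):
        lost = 1 - next_count / count if count else 0.0
        results.append((step, next_step, round(lost, 3)))
    return results

# Hypothetical funnel starting from one landing page
funnel = [("landing", 1000), ("product", 400), ("cart", 200), ("checkout", 150)]

losses = drop_offs(funnel)
for step, next_step, lost in losses:
    print(f"{step} -> {next_step}: lost {lost:.0%}")

worst = max(losses, key=lambda row: row[2])
print("Biggest leak:", worst[0], "->", worst[1])
```

Run it per landing page or per channel rather than for the site as a whole, since a blended funnel can hide a single leaky entry point.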

CTA/Goal performance. This is, of course, the biggest and most obvious piece of data to check. Which CTAs and areas and offers are performing the best? Worst? Not at all? For anything under-performing or not performing, note these as places to test, and consider testing removing them.

Devices. Which devices are your audience using?

Mobile/desktop split? Is your site optimized for the right devices? Review the experience with those devices and make sure it’s smooth.

Browsers. What browser/device combinations make up the majority of your traffic and engagement? Are there any that have higher-than-average bounce rates or abandonment rates?

Review the experience on the device/browser combinations with the worst bounce or abandonment rates to check that there’s nothing broken.
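Picking out the combinations worth a hands-on review can be sketched in Python like this. The rows, the `flag_combos` helper, and the 100-session minimum are all assumptions for illustration. The minimum is there so a handful of visits from a rare browser doesn't send you chasing noise.

```python
def flag_combos(rows, min_sessions=100):
    """Return (browser, device, bounce_rate) for combos bouncing above the overall rate.

    rows: (browser, device, sessions, bounces) tuples. Combos with fewer than
    min_sessions sessions are skipped as too small to judge.
    """
    total_sessions = sum(r[2] for r in rows)
    total_bounces = sum(r[3] for r in rows)
    overall = total_bounces / total_sessions if total_sessions else 0.0
    flagged = []
    for browser, device, sessions, bounces in rows:
        if sessions < min_sessions:
            continue
        rate = round(bounces / sessions, 3)
        if rate > overall:
            flagged.append((browser, device, rate))
    # Worst offenders first
    return sorted(flagged, key=lambda row: row[2], reverse=True)

# Hypothetical export from your analytics tool
rows = [
    ("Chrome", "desktop", 5000, 2000),
    ("Safari", "mobile", 4000, 2800),
    ("Firefox", "desktop", 900, 360),
    ("DuckDuckGo", "mobile", 50, 45),
]

for browser, device, rate in flag_combos(rows):
    print(f"Review {browser} on {device}: bounce rate {rate:.0%}")
```

Whatever comes out of a list like this is what you pull up on a real device, not a simulator.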

Why does this matter? Most developers program for and support Google Chrome. Sometimes Firefox. Potentially Safari or Edge. Almost never DuckDuckGo. I understand that there is a fine line to walk with managing your dev team’s resources, so I’m not advocating that you make them optimize for every single browser in existence—don’t try that, you’ll have a bunch of unhappy devs on your hands. What IS important is that you know what browsers your audience is predominantly using and make sure the dev team knows which ones it’s important for them to support and perform testing on. If they’re optimizing for desktop Chrome but 90% of your users are on mobile Safari, there’s a disconnect there. Hopefully there’s nothing broken in a different browser, but it does happen, so check it out.

YOU MADE IT!

Now What?

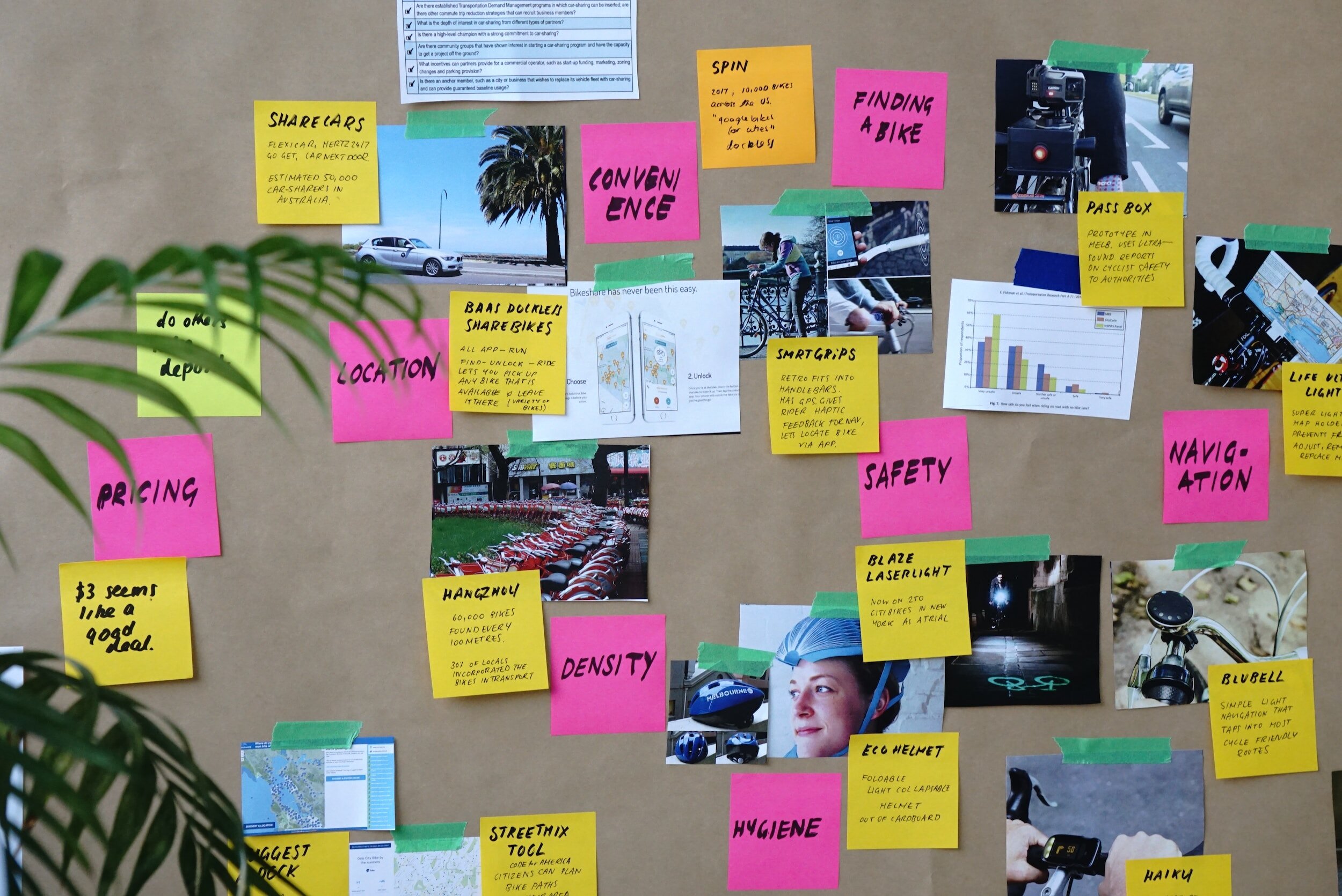

Once you’ve determined that everything is as it should be, functioning and behaving as designed, it’s time to START TESTING. You should have a good checklist of items to explore from the audit. The next best thing to do is honestly to organize your notes into a presentation with your recommendations on where to test and then have a brainstorming session with some available collaborators.

Need Help?

As you can see, auditing is one of our happy places. We love doing audits—filed under “Curiously Satisfying.” If you’re interested in getting an outside perspective or just don’t have the manpower to go through all of the above, reach out and let’s talk—we’d love to help out!